Xianwei Zhuang

About Me

I am a second-year graduate student in computer science at Peking University. My research focuses on foundation models, including pre-/post-training and RLHF of large vision-language models (VLMs).

Research Interests

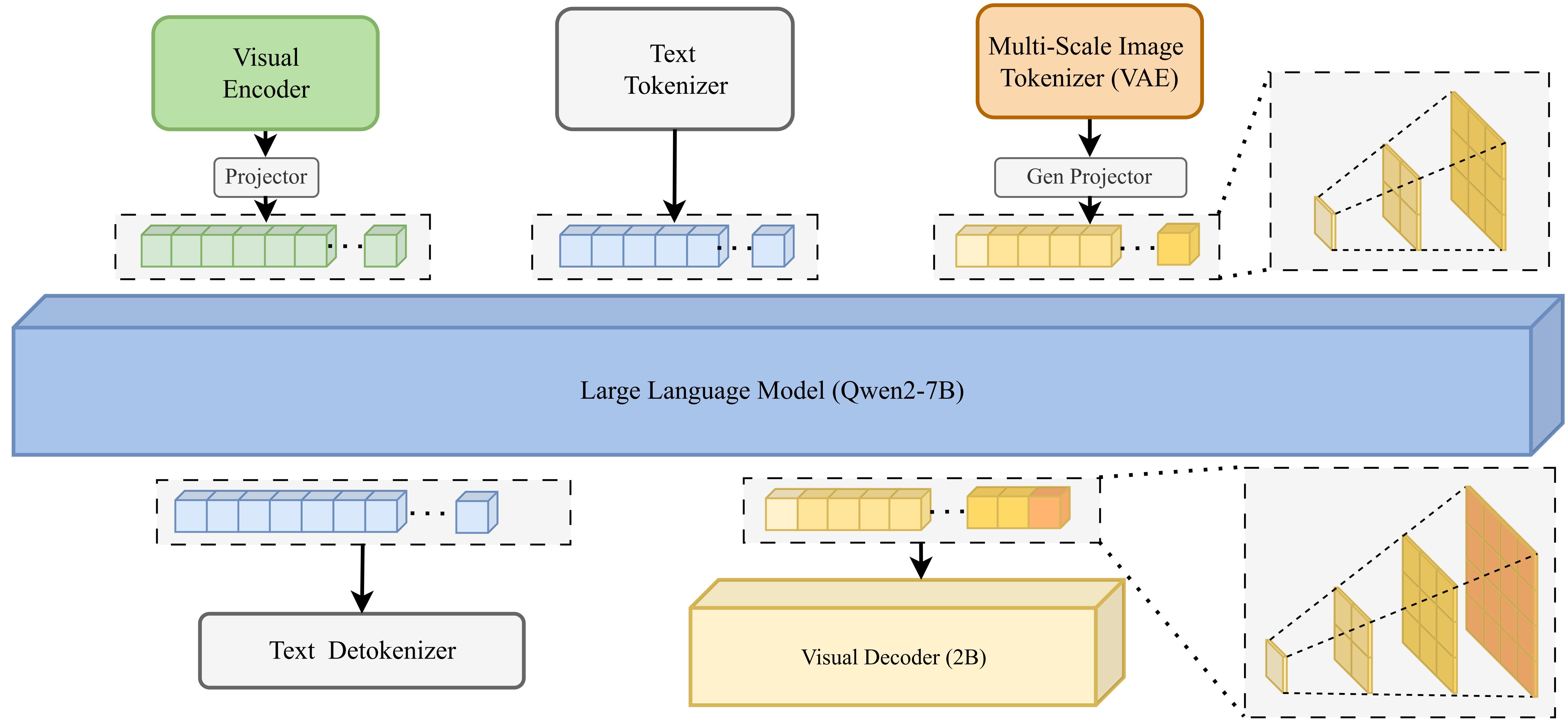

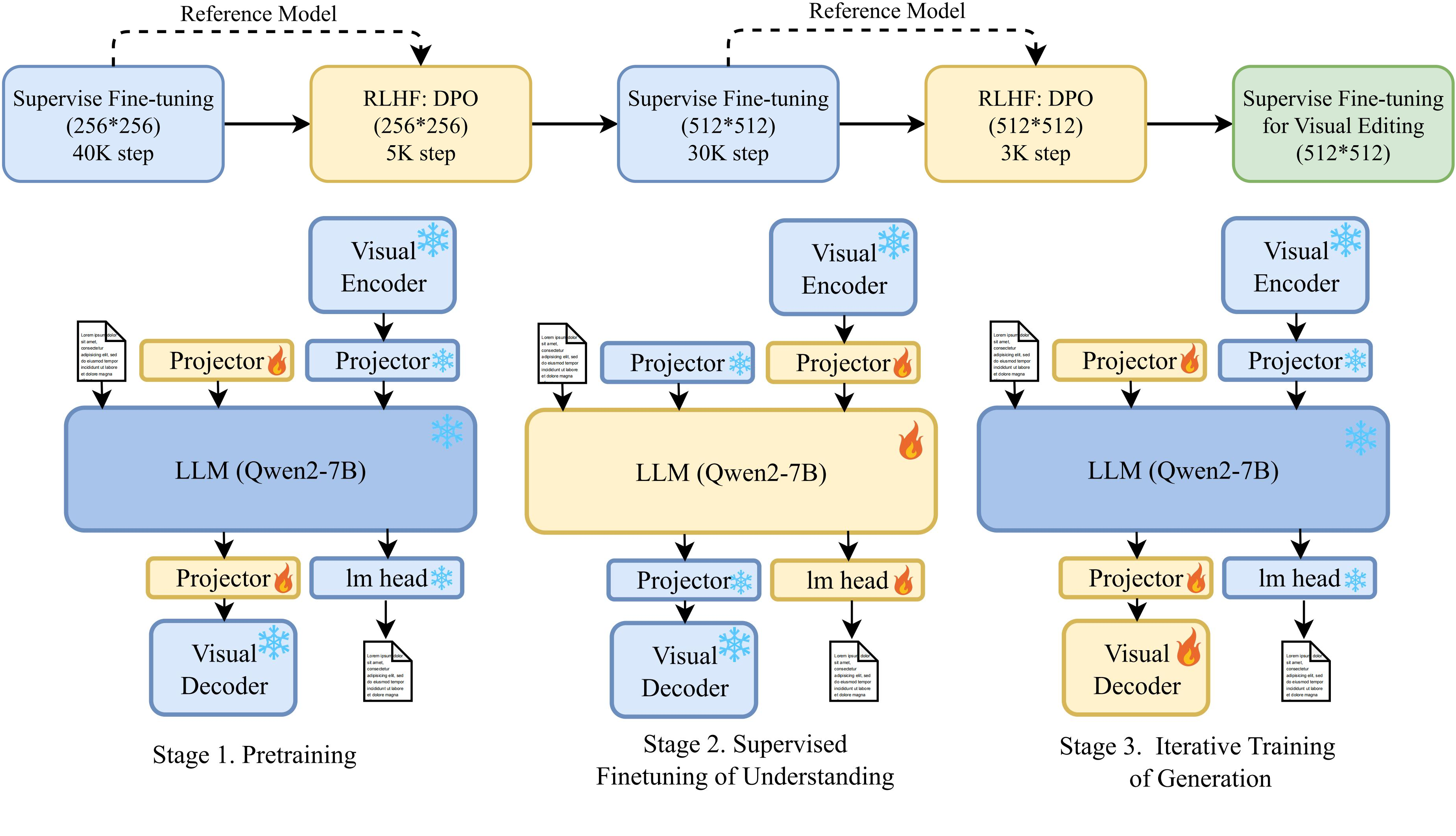

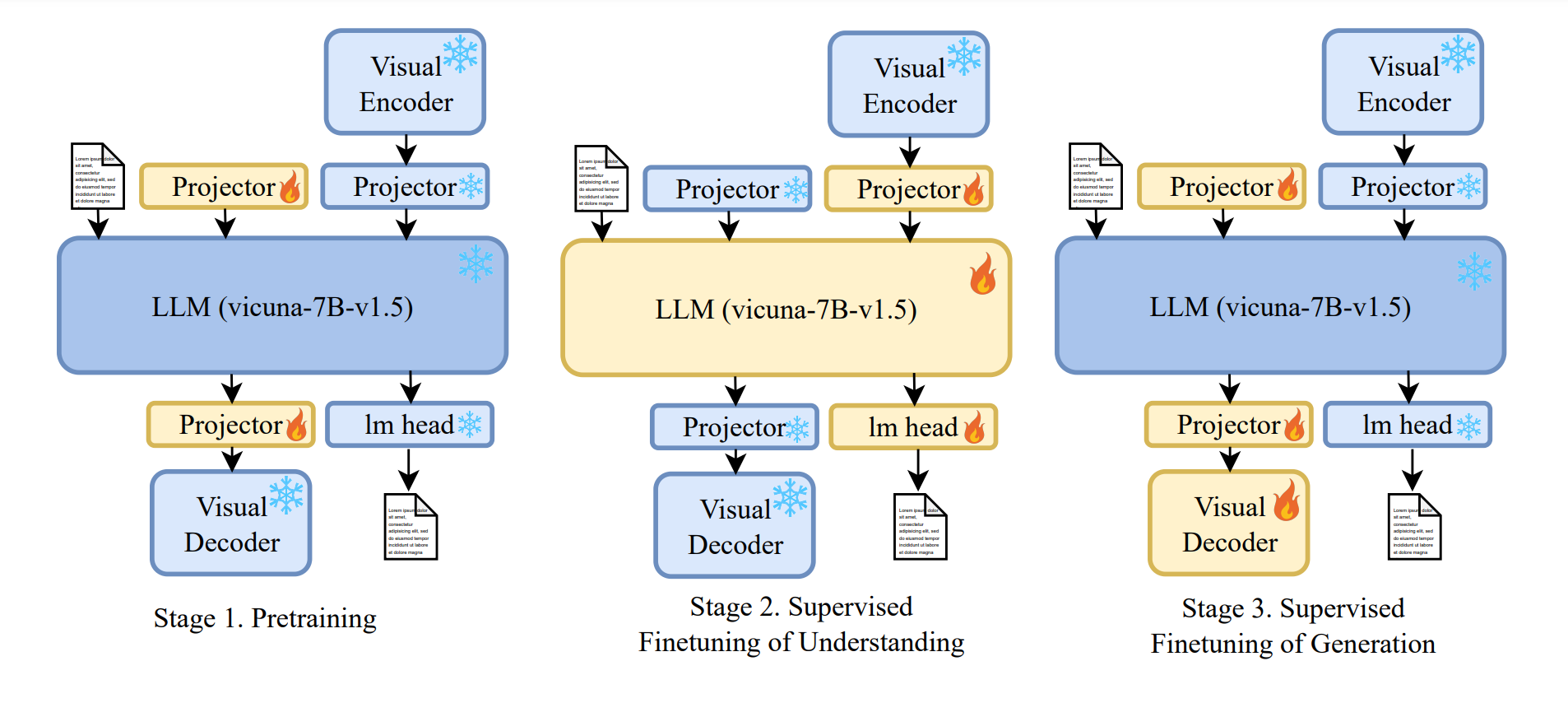

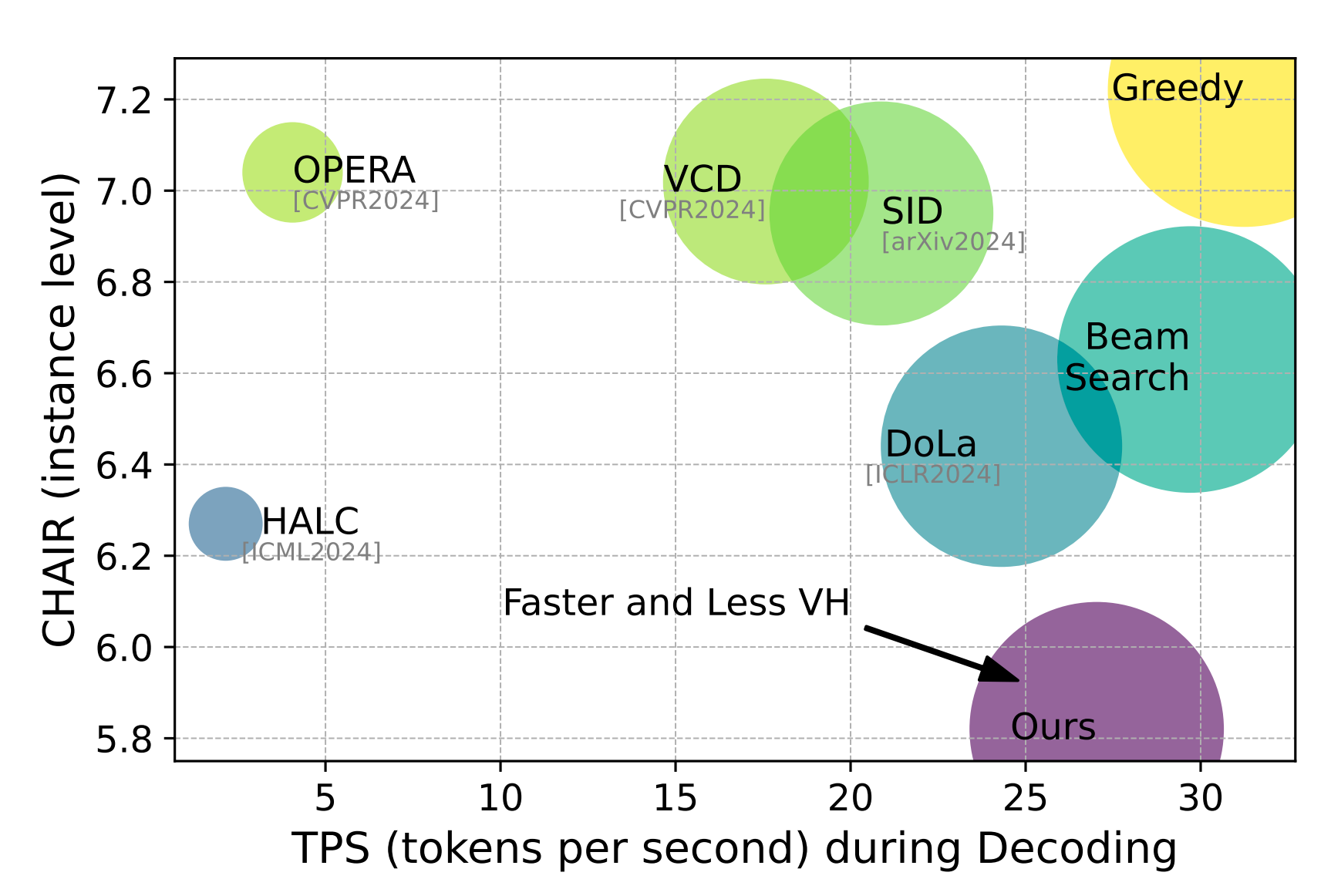

- Multimodal Large Language Models: Unified Understanding and Generation, SFT, RLHF, Reasoning [QwenVLo, VARGPT]

- Large Language Models: Long Context [Doubao]

Experience

Educational Experience

- Peking University: Pursuing a Master’s degree since 2023

- Zhejiang University: Bachelor’s degree, 2019 to 2023

Internships

- Qwen Team, Foundation Model, Tongyi: Core contributor to the 0-to-1 development of Qwen VLo, working on data synthesis, coding, pre-/post-training, and RL.

- ByteDance: Focusing on long-context modeling of LLMs and long-term memory for Doubao.

Honors

- Received the National Scholarship at Peking University in 2024

- Received the Zhejiang Province Outstanding Graduate award and the Zhejiang University First-Class Scholarship

News

- [Jun. 2025] The preview version of QwenVLo is now available to try at QwenVLo.

- [Apr. 2025] We open-sourced the training code and models of VARGPT-v1.1 on GitHub and Hugging Face. The repository has over 600 GitHub stars.

- [Feb. 2025] Our work VASparse has been accepted to CVPR 2025. The work is continuously being updated on GitHub.

- [Jan. 2025] We released the code, model, and paper of VARGPT-v1, our work on unified understanding and generation.

- [Jan. 2025] Our work on CoT distillation, initially completed in Feb. 2024, has been accepted to ICLR 2025.

- [Dec. 2024] We released the paper and code of VASparse, our work on hallucination mitigation for MLLMs.

Publications: VLM Pre-/Post-training, RLHF, Unified Modeling

-

Work in Progress

-

Preprint

Preprint arXiv (Preprint), 2025.

-

Preprint

Preprint arXiv (Preprint), 2025.

-

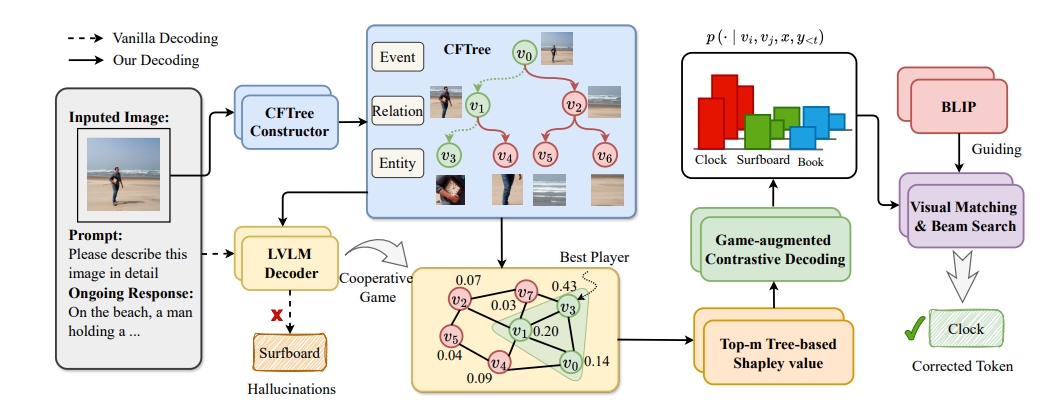

CVPR

Computer Vision and Pattern Recognition Conference (CVPR), 2025.

-

EMNLP

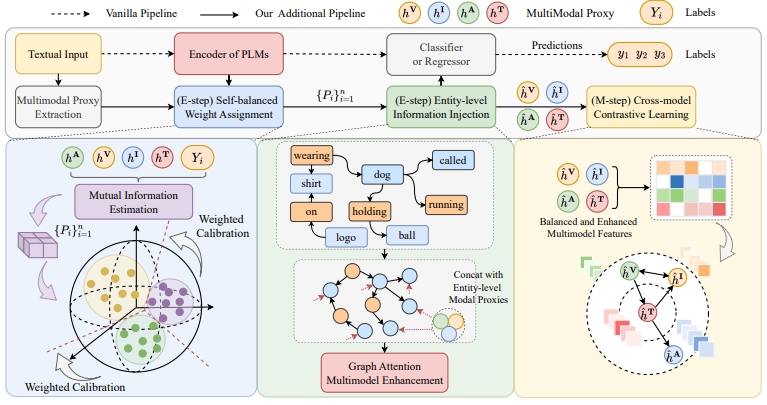

Empirical Methods in Natural Language Processing (EMNLP), 2024.


Publications: VL, CoT Distillation, NLU

-

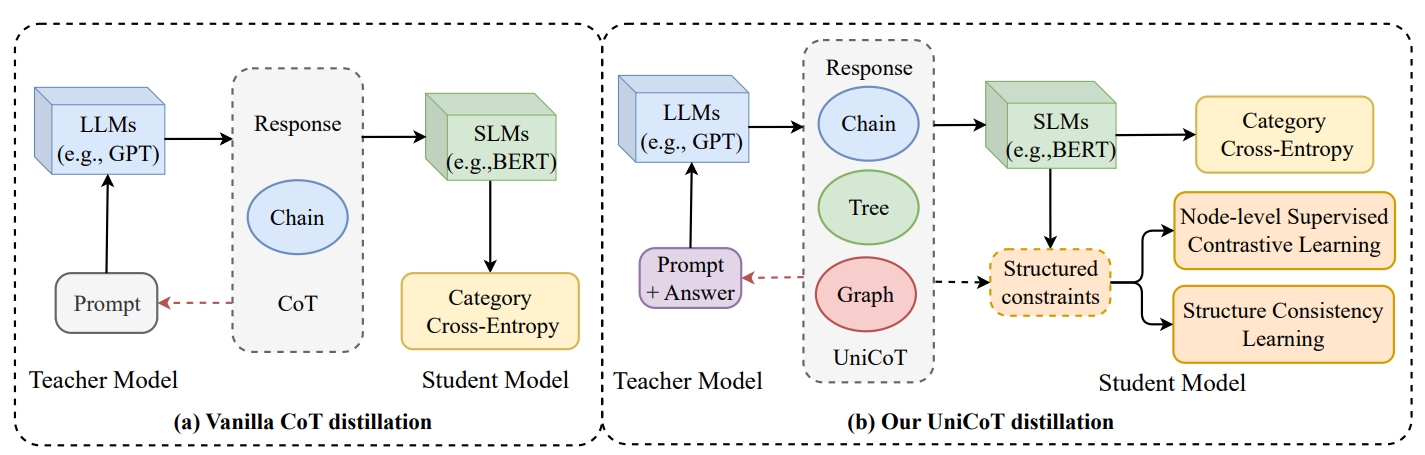

ICLR

International Conference on Learning Representations (ICLR), 2025.

-

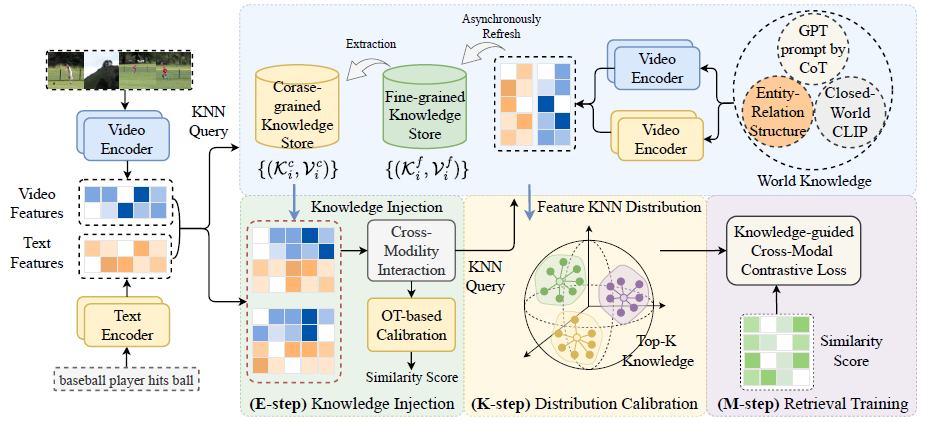

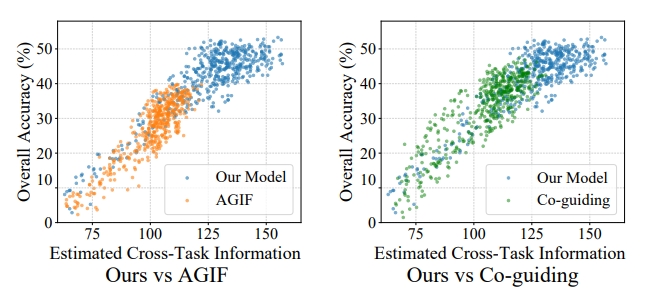

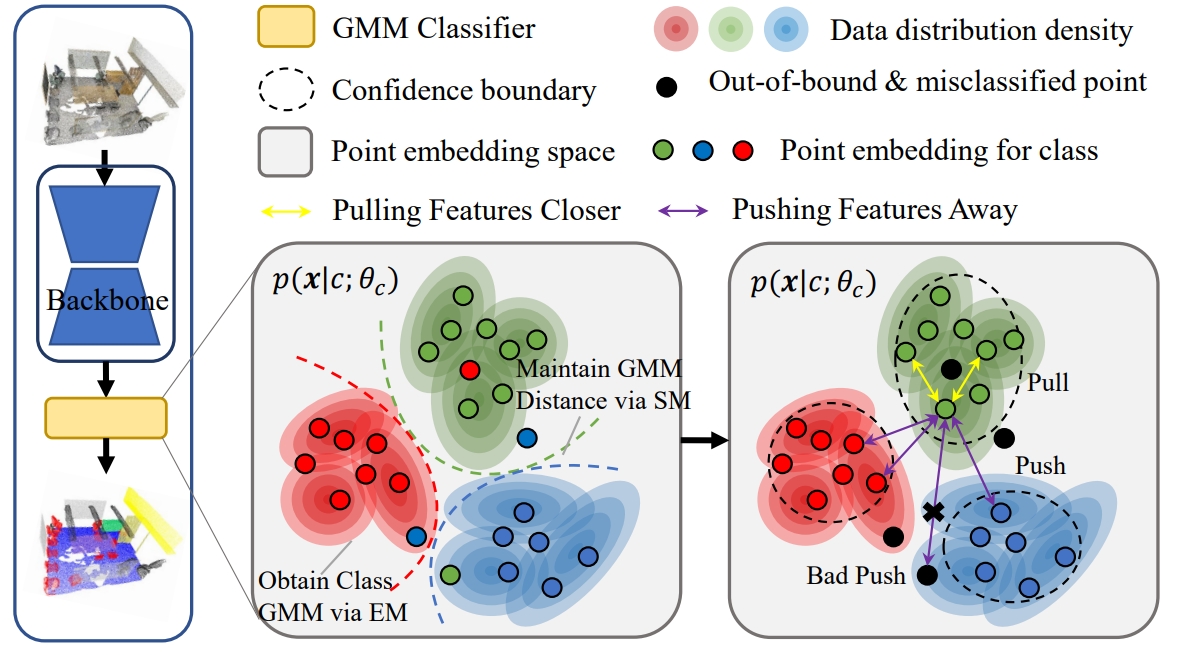

ECCV

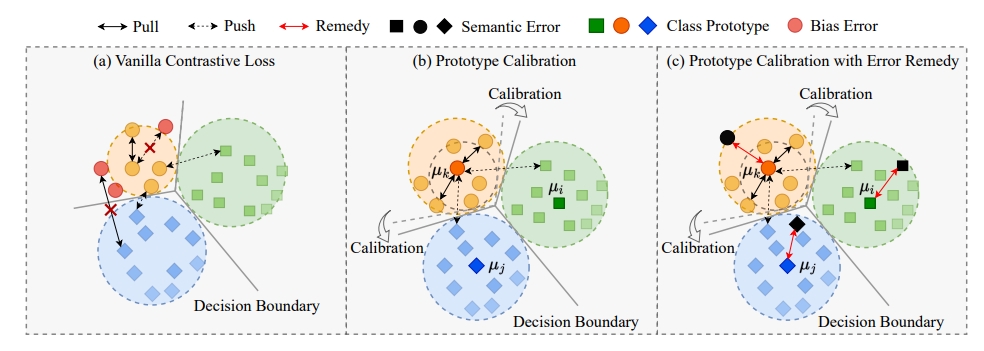

European Conference on Computer Vision (ECCV), 2024.

-

AAAI

Annual AAAI Conference on Artificial Intelligence (AAAI), 2024.

-

ACL

Annual Meeting of the Association for Computational Linguistics (ACL), 2024.

-

ACM MM

ACM International Conference on Multimedia (ACM MM), 2024.

-

Pattern Recognition

Journal of Pattern Recognition (Pattern Recognition), 2024.

